Giraffe360 Webinar #4-Understanding the Agent’s Need for Real Estate Media


DanSmigrod, WGAN Forum Founder and Advisor, Atlanta, Georgia

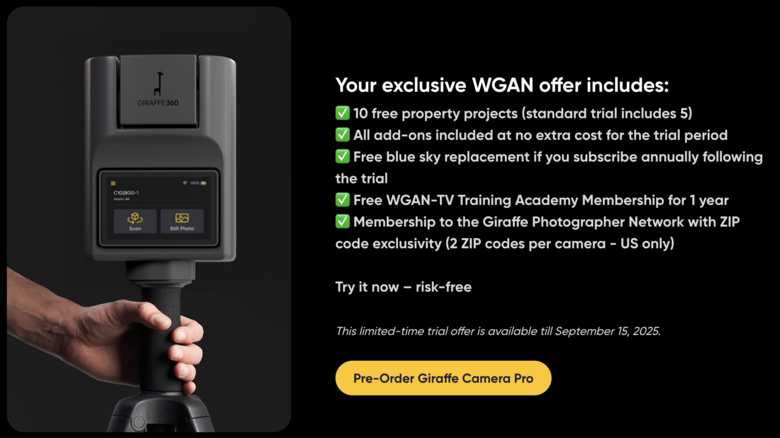

Giraffe360 is a We Get Around Network Marketing Partner

---

Giraffe360 Webinar #4 (12 August 2025) | Giraffe PRO Camera for Real Estate Media Providers | Guests: Giraffe360 Founder and CEO Mikus Opelts, Giraffe360 Chief Customer Officer Samy Jeffries and Giraffe360 Lead Product Manager Ainars Klavins | Tuesday, 12 August 2025 | Video courtesy of Giraffe360 YouTube Channel | @Amy @JanisSpogis | Try Giraffe360 for 60 days with 10 Residential Listings for $123 per month www.WGAN.info/giraffe360-pro

WGAN Exclusive Giraffe360 Pro Camera Offer (www.WGAN.info/giraffe-pro)

Giraffe360 Webinar #4-Understanding the Agent’s Need for Real Estate Media

Hi All,

Giraffe360 hosts its fourth educational webinar designed to help real estate photographers evolve into full-service media partners.

The Giraffe360 webinar features:

✓ Giraffe360 Founder & CEO Mikus Opelts
✓ Giraffe360 Chief Customer Officer Samy Jeffries
✓ Giraffe360 Lead Product Manager Ainars Klavins

The three discuss the four eras of real estate media technology, agent expectations in today’s market, and a technical deep dive into SLAM (Simultaneous Localization And Mapping), the “silent unlocker” behind AI-powered media creation.

Key Takeaways

✓ Four eras of real estate media: From MLS books in the 1980s → DSLR boom and Zillow in the 2000s → immersive tools like Matterport and drones in the 2010s → to today’s AI-driven, unified media pipelines.
✓ Shift in buyer expectations: Millennials and Gen Z demand video, virtual tours, and lifestyle content; professional-grade media is no longer optional.
✓ Agent priorities: Survey data shows U.S. Realtors rank quality, speed, range of deliverables, and ease of booking as top factors when choosing a media partner.
✓ Expanding deliverables: HDR photos, floor plans, virtual tours, cinematic fly-throughs, and social-ready content are now expected as part of the standard listing package.
✓ SLAM technology: Accurate AI workflows depend on tracking.
Giraffe PRO’s advanced sensor fusion (LiDAR, IMU, barometer, GPS) ensures consistent, scalable floor plans, tours, and videos.
✓ New releases: Virtual tour sky replacement, in-tour object removal, 3rd-gen AI editing, and improved Listing Spotlight outputs.
✓ Special offer for WGAN Community: Try the Giraffe PRO Camera for $123/month (60 days, 10 listings, all features included).

Topics covered

– Historical timeline of real estate media (1980s–today)
– How buyer behavior drives agent media demands
– Key survey insights from 1,000+ agents
– Deliverables agents now expect as “standard”
– Barriers to scaling full-service media and how AI removes them
– Deep dive into SLAM, sensors, and data fusion powering accuracy
– Best practices for capture and handling
– New feature releases and upcoming roadmap

Questions about the Giraffe PRO Camera?

Best,
Dan

WGAN Exclusive Giraffe360 Pro Camera Offer (www.WGAN.info/giraffe-pro)

---

Transcript (video above)

- Hello and welcome to the fourth webinar in our photographer and real estate media partner webinar series, here at Giraffe360. I'm Samy Jeffries. For those who've joined before, you're probably used to seeing my shiny head. I'm the CCO here at Giraffe. And I'm really excited to be back in the webinar seat on hosting duties again for today's webinar. I'm joined by Mikus Opelts, our CEO. Hello Mikus, how are we today? --- Hey, Samy. I'm really good, really good. I'm quite excited about today's agenda. I want to talk about the four eras of real estate media technology, and I think Janis' section on SLAM is super exciting. --- Brilliant. And so yeah, as Mikus mentioned there, we've also got Janis B., our camera technology product manager, and he's live from our facility in Riga, where our camera technology is designed, built, and tested. Hello, Janis. --- Hey guys. Cool to be back. I'm also very excited about today's webinar and yeah, curious to see what we have today for the rest of the crew.
--- Great to have you back. So, as you saw on the title slide, the main topic for today's webinar is, Real Estate Media Production in an AI World. And we are going to explore how AI is transforming the way real estate media is produced and what that means for agents' needs right now. So we're going to start with that kind of virtual tour. Look back at what Mikus has kind of- the four eras of real estate media tech. And this is the major shift that has shaped how properties are now marketed. Then we'll move into a pitch on how to work with today's agents, what they want to see, with some really powerful data we've collected straight from agents, to understand what drives their decision making processes. And after that, a deep dive into SLAM, the behind the scenes system that powers accuracy, automation, and AI, in our media production process. And then finally a Q&A. And if you've got questions as we go, feel free to scan the QR code, head over to Slido, we will get to every single one of those questions at the end of the webinar. But before we get into the meat of today's agenda, just a brief update on the Giraffe PRO Camera release schedule. For those in the pre-order waiting list, an apology, we've pushed back the release of the Giraffe PRO Camera to September 2025, and the driving force behind this pushback is to get things perfectly right the first time. So, the cameras are being manufactured and calibrated, but there's just a few more edges we want to polish before we go live with the release. The first thing is the full AI content rendering speed. The new technology means new data to work with, some more updates to SLAM, and rapid AI rendering models. Secondly, the video outputs, a round of onsite testing has identified some core areas for improvement. And finally, the capture flow optimization. 
We need to find the exact sweet spot between the capture process and the flow to optimize the user experience and time investment, while making sure that it's aligned with the data requirements to deliver that full media suite. So just to restate, September 2025 is the new planned release for live testing and pre-orders, and October 2025 for that early bird list. Now over to you Mikus, to give us that virtual tour of the four eras. --- Yeah Samy, thanks for setting us up, and just to comment on the delay. Yeah, apologies. We'll be around 60 days late from the originally set deadline, but I hope the wait will be worth it. And we're really pulling out all the stops to make this technology groundbreaking for everyone in the market. But today I wanted to talk about the four eras of real estate media technology. If we go down memory lane through the history of this industry, back in the eighties real estate media and real estate search were an entirely analog process. It went mostly through the MLS books, the printed books. And photography in the eighties was mostly still on film, and developed in labs. Then in the nineties, we switched to the first stages of digital real estate search. Realtor.com got launched, and in this decade around 2% of buyers were searching for properties online. What's interesting is that at the end of the decade, the term virtual tour got coined, meaning the first remote property exploration. And we're still using the same vocabulary these days. The next decade I would characterize as digital tool development. In the early 2000s, DSLR cameras became a thing, and drove the transition to digital image creation. And also portals like Redfin and Zillow got launched in 2004 and 2006, signaling that there is now a home for digital listings to sit, and that there are very specific internet destinations, the portals, where home buyers go to search. YouTube was launched in 2005, signaling the new era of video production.
iPhones brought MLS and emails to our pocket. A fun fact for photographers is that, around 2008 HDR became popular, finally fixing the age-old problem at that point, fixing the bright windows and the dark rooms problem. Switching gears to the next decade, I would call this immersive tools age and it started with Matterport 3D tours being launched and that's 2013. 2014, the DJI Phantom was the first drone that really took off and allowed very easy drone footage capture. I remember before 2014 when I needed to do like an outside shot, there were pretty much two options. You either rented a helicopter, which is crazy expensive, or the second option was to hire a crane, like a lift, where you could go up and do a photo shoot from the sky. And when the DJI Phantom came out, it was a craze, suddenly it was so easy to do a photo shoot or film a video. I think another significant milestone is 2015 BoxBrownie, which signals the decoupling of real estate photography creation, decoupling from the person who is doing the photography is no longer needed to edit it. And BoxBrownie really made a good solution where you can access on demand, 24/7 available photo editing service and signal this big transition of images being edited in Southeast Asia, where photographers can be working in States or in Europe. Early AI tools started to pop up and they started to do lead scoring and some support chatbots. And in this age, around 90% of real estate searches happen online. So the big leap from the previous decade, from 50 to 90, which leads us to the fourth decade to the age we are in today. 99% of buyers have moved their search online, and they are starting the real estate journey with the search online. This decade started with the big challenge for all of us, COVID, completely changed our lives. But now in hindsight, it seems like COVID was also like a beta test of how a digital-first world was going to look. 
Virtual tours and remote workflows really got accelerated in those couple of years, and we see them continue their run after COVID. And AI hits the big stage in 2023: ChatGPT comes out. We all get excited about the possibilities of having artificial intelligence at our fingertips. And I think it sparks the imagination that's going to truly fuel this decade, on how AI is changing the landscape, which brings us to today. And going ahead, we want to coin a new discipline, real estate media technology, which is a convergence of these fragmented asset creations into one unified, fully integrated workflow that delivers a digital-first listing journey. So, in a sense, we want to make a market shift from single asset creation, where we talk about photography, video creation, and floor planning as different technology streams with different requirements, to one unified term, real estate media technology, that describes the industry and what we are doing. But that's on the tech. So it's been amazing actually to see how, when you are in the weeds on a yearly basis, you think things don't change, but when you look at a 5 to 10 year period, we see how quickly things have actually been developing and how much the industry has changed, which I think nicely segues into the consumer side. Maybe Samy, you want to talk about the consumer, and how the consumer has changed over the decades? --- Oh yeah. And I love seeing that timeline laid out, because you can see, historically, what were actually some of the critical advancements in technology that led to such a change in the way that property is marketed. And also, it is hard to understand exactly: is it the technology that's forcing the market, or is the tech trying to keep up with the market? So, I think there are some really important questions you can ask yourself by seeing a timeline like that. But yes, 100%.
We've seen how technology has evolved. And as you just mentioned, the other side of that story is how those advancements lead to shifting buyer expectations. And so, back when baby boomers were the main buyers, like a few photos and a short property description were enough to get them to a viewing, that was the job, right? Get this person through the door, get them excited on the day. And then we kind of go into generation X, where it's, okay, I want a little bit more detail. I want to see photos for each room. I want to know the sizes and layouts, and the property specs before I make the trip. But now we've seen a seismic shift in the habits of millennials and Gen Zs, right? It's about context, and on-demand access, like they're accustomed now to scrolling through highly curated, visual first content, on TikTok, Instagram, listing platforms. And they expect those experiences. They expect to see videos, tours, lifestyle photography, to help them imagine living there. And they're connecting with both the content as much as the specs, right? The scores, the neighborhood, the vibe, the work from home potential. And so, for them that professional grade, multi-format media is now the baseline. So yeah, I love to see all kinds of things laid out. And I think now is a good time for us to switch gears. So we've looked at that timeline, and so much happens in short periods, but we're all kind of working in this world and it's hard to see the wood for the trees, right? So, an important trend, you know, what is an important trend to keep on top of? Who's driving the requirements? Is it the market? Is it the agent? And what do actual agents who are our viewers, today's customers expect? So what we wanted to do is to run a large scale litmus test with agents as to what actually matters for them right now. We did a survey with a great pickup rate. We've heard from over a thousand realtors globally on a few key questions. And here's the results. 
So, first of all, we asked Realtors if they work with a media partner. In the US 60% said they always do. 23% said frequently, and only 17% said never. That means more than 8 out of 10 agents in the US are using professional media on a regular basis, and professional media providers on a regular basis. So, that's photographers, service providers. But interestingly in Europe, there is a bit of a shift there, the numbers are lower but still extremely significant, right? 30% always, 24% frequently. But that's half of the market working with a media partner, or photographer most of the time. So in most markets, professional media isn't nice to have, it's the norm. And the real question now for the agents isn't if they use it, but who and why they choose their partners to work with. --- Samy if I can add a point here, we see from our users we have in Europe and the US, the markets are, I would think the difference is in the fact that in America, agent is a very independent role. A real estate agent, yes, works in a brokerage, yes, receives a brokerage support, but is really an independent person that carries out lots of these tasks. Hence outsourcing photography, and using it with the media partner is a better modus operandi. Where in Europe we have these small offices where a real estate agent is an employee, hence the office manager provides different tools and thinks about the efficiency of the office and there is much more do it yourself model being prevalent. I would suggest that these are the inputs why we see some difference, when an agent decides to use a media partner, and when not. --- A hundred percent. And in the US, I think a really good way to sum it up is, it's more agency, as in like more autonomy and more commission, right? So, they've got that space to make those decisions for what's right for their business and what it is that they're trying to achieve. 
So, with that in mind, for the rest of the data, we've honed in on the responses from our US Realtors who've responded to the survey. So the next question that we asked is, what's important to an agent when choosing a media partner? And obviously at the top of this we see quality and price, and that's no surprise at all. They've been the main decision drivers for years, but looking down the list gives us way more insight. So, speed of delivery is increasingly important, especially in those competitive markets, where agents want their listings to go live within hours, they've got a captive buyer who also needs to sell. The range of deliverables is also extremely important to agents. So floor plans, tours, and video are a key differentiator as they try to stand out in a very, very competitive landscape. They can't create a full marketing package for a property with just one content type. Now reliability and professionalism might sound super obvious, but when we followed up with agents on this, in practice they're about consistency. The agents really value knowing what it is they'll get every time. And when I followed up with agents, it's also been around that bedside manner as well. So, they're sending a third party into the property to represent their brand, and how that person interacts with the vendor can make or break, you know, a tricky relationship for that agent. And finally on the list we saw, not insignificant, ease of booking. So, if the process is clunky, it's a strike against the partner no matter how good the end product is. And when we followed up with agents who answered with this as one of their primaries, they said that the ability to get the media capture booked in then and there can help them get some deals over the line.
So, if you are working with emails or WhatsApp only, or they've got to call you and wait for a response, it might be time to consider investing in a scheduling system, because if they can get that content capture booked in with the vendor there and then, it then just becomes about getting them to sign on the dotted line, and move forward with the process. So the next question we asked, taking into account the decision making factors for agents when choosing a media partner. This becomes very, very interesting. So we've asked them what assets they expect as standard from a media partner. HDR photography is a given, right? That's the baseline for almost everyone, but more than half of them also expect to see floor plans and virtual tours as a part of their package. So these aren't necessarily now premium extras, for a lot of agents they're part of what they expect to see on a core listing toolkit. Now, 40% are also expecting to see videos and over a quarter now look for social media ready content. So that's not just images that they can post on socials, that's prebuilt social media ready content they can hit their listings with, they've been designed to drive interaction and to stop that scroll as we've mentioned in previous webinars. So, even though that's a smaller figure, it's definitely worth watching as the property marketing has shifted into platforms like Instagram and TikTok. And we see in that kind of historical timeline, this is on fire now with the potential of the buy and sell, the audience is already kind of active and captivated on the social media platforms. So, what does all this tell us? It tells us that the demand is there, and the opportunity is there to capitalize. So, if we go into the next slide, we kind of know that a photographer or a media partner can grow their market share with agents by providing that full media package. 
Most photographers stop at the stills. You deliver everything in one visit, the HDR, floor plans, virtual tours, videos, and the marketing, by using a tool that allows you to implement all of that. This isn't about adding more work, it's about one capture delivering everything they want so they can instantly elevate the listings without coordination, without additional time investment, by working with that media partner, and you become the easiest way for an agent to upgrade their marketing. A lot of people here are probably thinking, like, what's this dude talking about? If it was this easy then it would be this easy, right? We see a number of reasons why it's really tough to be that one-stop shop and provide that kind of plethora of marketing content. Now, the first is that it's manual, right? It's editing, QA, and then processing. Most photographers and media partners are spending a lot of time checking files, fixing mistakes, exporting. It eats time, it eats margins, and it makes scaling painful. Secondly, more deliverables is more post-production, right? If you add floor plans, videos, tours, drones to the package today, you're not just adding value, you are also adding hours and delays. And how do you cost that to your clients? And finally, there's accuracy and quality, right? When it all depends on a human-led process, results can vary from project to project, especially as you scale, if you need to bring other people into your business, which makes it harder to confidently pitch, and again to deliver at scale. So, sorry, that's a bit doom and gloom, right? But there is good news: those kinds of barriers that we've just seen aren't set in stone. With the right tool sets they can be eradicated, and that's where the opportunity gets exciting, right?
Earlier in the timeline, in the four eras, we looked at the technology releases and the follow-up impacts they had on the market, like the iPhone, like YouTube with video content, and Zillow hitting the market. And AI is becoming a part of our every day. But how will it accelerate your potential as a photographer to become a full media partner? The way that it does it is by handling the repetitive stuff so that you can focus on high-value client work. So it's making that full media delivery possible without adding any time on site or in post production, allowing you to control the cost and time investment, and it keeps that quality consistent every time. So you can pitch it with confidence. --- Which I think, Samy, segues nicely into this: if we try to summarize the benefits for the photographers who work with AI, I would break them down into four key areas. The first is the production cost, and especially the single asset production cost, which goes to zero. What I mean by that is, editing the next photograph, the 50th, the 55th, doesn't cost extra dollars. Whether you're rendering one or five videos will no longer be as important. There are service costs and there are fixed costs, but the single asset production cost is going to zero. But today we live in a world where every asset costs money. The second, I think, is turnaround time. Today's industry standard is next-day delivery. And typically it actually takes between one and three days to deliver media content from a photographer. But now with AI we see the shift: we can get it done in hours. It's now going to take a couple of hours, is the general sentiment. And then greater accuracy and consistency. There are fewer manual steps, which breeds very high consistency from property to property, from asset to asset. And last, but I think definitely not least, is the new possibilities.
And we spoke in the last webinar about the Gaussian splat created videos. In the previous webinars we've shown different marketing automation workflows, how we create websites, how we create social media ready posts. And with all these new possibilities, we are only starting to scratch the surface of what will be possible. But I think that leads us to what probably is the main topic from today's perspective, what we wanted to cover from the technology side: what's the big unlocker for the AI to work? And we probably want to zero in on these four letters, SLAM, but Janis, I think you're more competent to break it down than me and Samy here. We'll let you lead the way. --- Yeah. I mean first of all, like you said, AI is like a big topic, right? And we thought we need to unpack it a little bit to give background on insights and aspects that come with this challenge. And we see the benefit, the benefit is there. We see the timeline, the trend, it's not even a trend. It feels like an inevitable future, and the future is already today. So we need to just figure out how we get there, right? So, we thought we could make a little bit of a risky move by going really deep into the technical aspect of how our camera works and how we think about technology that can support our vision, and the industry's vision in general, of how things will happen in the future. So today we thought we would talk about SLAM, or tracking, which is quite an unusual topic. We usually talk about images, about scans, but there's a third element, tracking, that people actually don't know about. It's super critical for this AI pipeline to work. So, SLAM in short stands for Simultaneous Localization And Mapping. Essentially it means that, while the camera is moving, it scans its surroundings, collects data, and this data is used to understand where the camera is at any given point.
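
To make the "localization" half of SLAM concrete, here is a minimal Python sketch of how a tracker can keep a running estimate of where the camera is by chaining relative movements together. It is an illustration only, not Giraffe360's implementation: the function names `compose` and `track` and the 2D pose format `(x, y, heading)` are assumptions made for this example. A real SLAM system would estimate each relative movement by matching new scans against the map it is building, rather than being handed the movements directly.

```python
import math

def compose(pose, motion):
    """Apply a relative motion to a global 2D pose.

    pose   = (x, y, heading) in the map frame, heading in radians
    motion = (forward, sideways, turn) measured in the camera's own frame
    Hypothetical helper for illustration only.
    """
    x, y, heading = pose
    forward, sideways, turn = motion
    # Rotate the camera-frame displacement into the map frame, then add it.
    new_x = x + forward * math.cos(heading) - sideways * math.sin(heading)
    new_y = y + forward * math.sin(heading) + sideways * math.cos(heading)
    return (new_x, new_y, heading + turn)

def track(motions, start=(0.0, 0.0, 0.0)):
    """Chain a list of relative motions into a list of global poses."""
    poses = [start]
    for motion in motions:
        poses.append(compose(poses[-1], motion))
    return poses

# Walk 2 m forward and turn left 90 degrees, then walk 1 m forward.
path = track([(2.0, 0.0, math.pi / 2), (1.0, 0.0, 0.0)])
```

Each scan position in `path` is expressed in one shared map frame, which is the property later steps such as floor plan generation rely on.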
And this is a very kind of essential piece because if you look here, these are some of the full media package deliverables. And as Mikus and Samy said before, they're getting more and more, right? So we need to figure out in what rational and speedy way we can actually produce these assets. And it's for sure it's not a manual, kind of labor, is not really an answer. So, we know that, actually we need to figure out how to, we can produce these through AI, through automations, and yeah, sure there will be some quality control, but that's about it, how we think this can happen. And we believe that this is completely doable. For automations to work really there, in simple terms, we see three categories that need, in terms of data that needs to be plugged in. Needless to say the data needs to be kind of quite solid, high accuracy, high resolution. And as we have spoken so far, obviously it's visuals, it's panoramas, it's still, obviously it's 3D data, in our case in terms of point clouds. But there's also a tracking, and this is what we call, kind of a nickname, it's SLAMming, even though it's a bit more complicated, we'll go into that. But also a tracking because, without the tracking, the camera is not, cannot really communicate where, what happened, which direction, what facing, et cetera. And lastly, to produce this data, there's a capture moment, capture process. And as mentioned before, we show you could create a completely different flow for each asset, but that's just not feasible. Looking how busy the schedule is and how much gear there is. We really truly believe that there is a way to create this one single capture flow at the start of the journey, which then through the production and post-processing funnel creates all of these amazing assets. It's both faster, more practical. And this is basically the kind of how the game is changing. 
And I think it's worth mentioning already at this stage that yes, we talk about automations and AI, how it's going to change, and how it is already changing these asset production processes. But the capture actually is interesting. The moment of capture is still very connected with a photographer or PRO user, because they actually have the biggest impact on how good this data will be. How good the quality will be and the accuracy. Yes, we provide from our side the camera, which is a technology but it still requires a certain way to handle this technology. So, with that in mind, with this bigger picture in mind, let me bring you guys back a little bit, let's dive deeper into the technology that our camera is using. And, it might sound a bit technical, but we thought it'll be interesting for you guys to get- Also leave a feedback. For the next webinars we can unpack other segments of AI automation. But tracking is definitely one of the interesting ones. So, the most important, kind of component for the SLAM, really is our LiDAR. And how it works is, when you travel from A to B in the property, it constantly scans the environment. And this is done by LiDAR in this horizontal position and it tracks objects in this horizontal plane like visualized here. It's pretty amazing that it actually runs with like 3000 RPMs and each revolution is like 1 to 2000 points. So, it collects a lot of data. And why is this technology so good because it's pretty precise, all right? It can leverage the precision of what LiDAR is also. Because our LiDAR is also kind of a beast when it comes to 3D data capturing the scans. But we utilize the power also in this tracking environment. And, so that's all pretty nice. But it actually also has some kind of downsides, some technical downsides that is a cool challenge to solve really. One of the downsides, if you can just for a second, yeah, come back here. 
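
As a rough illustration of the data volume mentioned above, the following Python sketch works out the point rate of a spinning LiDAR and converts one revolution of range readings into 2D points. It assumes the spoken "1 to 2000 points" means 1,000 to 2,000 points per revolution, and it assumes readings are evenly spaced around the circle; the function names are hypothetical and not taken from any Giraffe360 software.

```python
import math

def points_per_second(rpm, points_per_rev):
    """Points collected per second by a LiDAR spinning at `rpm`."""
    return rpm / 60 * points_per_rev

def scan_to_xy(ranges_m):
    """Convert one revolution of evenly spaced range readings (metres)
    into (x, y) points in the sensor's horizontal plane."""
    n = len(ranges_m)
    return [
        (r * math.cos(2 * math.pi * i / n), r * math.sin(2 * math.pi * i / n))
        for i, r in enumerate(ranges_m)
    ]

low_rate = points_per_second(3000, 1000)   # 50 revolutions/s * 1000 points
high_rate = points_per_second(3000, 2000)  # 50 revolutions/s * 2000 points
square = scan_to_xy([1.0, 1.0, 1.0, 1.0])  # four readings, 90 degrees apart
```

Under those assumptions the horizontal LiDAR would produce somewhere between 50,000 and 100,000 points every second for the tracking algorithms to work with.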
So one of the downsides, maybe some of you already are familiar with LiDAR and this 2D plane scanning, is that it really needs a vertical object at the LiDAR height to be there for the tracking to happen. If there's nothing there, for example if you're outdoors, or if there are some moving objects, or there are not enough unique features, like in corridors, the LiDAR and the algorithms (by the way, here as well the data is analyzed afterwards by AI and algorithms) might get confused, because there are not enough unique features, or there's nothing there. So, one thing for sure, even this high tech is not enough to give full tracking capabilities. By the way, SLAM is also used in self-driving cars and home appliances, like robotic cleaners. But when it comes to self-driving cars, this is one of the reasons it takes a while for them to develop: the tracking is still tricky. And also in our case, we figured out that we need to add supporting sensors to the camera to make it more robust, and more adaptable to these corner cases. And this is why we also have a bunch more sensors. And just to give you guys an insight into how much we have packed into this camera just for the tracking alone, there's also a couple of IMUs. In short, they also track your position and your orientation at the same time, something that everyone's familiar with from fitness trackers, phones, or smartwatches. And this assists the LiDAR: in case the LiDAR doesn't pick up the objects, we can still sense if the camera's moving in X, Y, Z. But the IMU cannot be the answer on its own. You might think, well, then just use the IMU. But an IMU has a tendency to drift, we call it drift. It tends to build up inaccuracies over time, so it cannot work alone either. So, what we do is called sensor fusion. We put these two kinds of data together, and together they generate this accurate tracking.
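
The idea of fusing a drifting IMU with LiDAR that sometimes loses its features can be sketched in a few lines of Python. This is a simplified one-dimensional complementary-style filter written for illustration; the function name `fuse_track`, the `gain` value, and the simulated numbers are all assumptions, and the webinar does not say which fusion algorithm the camera actually uses.

```python
def fuse_track(imu_steps, lidar_fixes, gain=0.8):
    """Blend IMU dead reckoning with LiDAR position fixes along one axis.

    imu_steps   : per-step displacement reported by the IMU (metres)
    lidar_fixes : per-step absolute position from LiDAR, or None when
                  the LiDAR sees no usable features (e.g. a bare corridor)
    gain        : how strongly a LiDAR fix pulls the estimate (0..1)
    """
    estimate = 0.0
    track = []
    for step, fix in zip(imu_steps, lidar_fixes):
        estimate += step                        # predict from the IMU (drifts)
        if fix is not None:
            estimate += gain * (fix - estimate)  # correct toward the LiDAR fix
        track.append(estimate)
    return track

# Simulated walk: 100 steps of 0.1 m; the IMU over-reports each step by 1 cm.
true_positions = [0.1 * (i + 1) for i in range(100)]
imu_steps = [0.11] * 100
# LiDAR loses tracking for steps 40-59, as it might in a featureless corridor.
lidar_fixes = [None if 40 <= i < 60 else p for i, p in enumerate(true_positions)]

imu_only = fuse_track(imu_steps, [None] * 100)
fused = fuse_track(imu_steps, lidar_fixes)
imu_only_error = abs(imu_only[-1] - true_positions[-1])
fused_error = abs(fused[-1] - true_positions[-1])
worst_fused_error = max(abs(f - t) for f, t in zip(fused, true_positions))
```

In this toy run the IMU alone ends up about a metre off, while the fused estimate only wanders during the LiDAR dropout and snaps back once fixes return, which is the behaviour described above.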
When it comes to detecting the height, we plug in a more specific sensor, a barometer, which basically detects the air pressure. And from that it calculates if a user has gone a floor up or down, to the second floor for example. This helps us later, for the floor plan generation, to detect if there's a floor change. It's another layer of tracking that is also critical for our pipelines to know what happened in what position. And then if we move forward, we have even more metrics that we track, really. One of them is positioning, with a magnetometer and encoders on the motors built into the camera that help the camera to rotate. These help us to detect the angle at any given time, and to detect north, so it works as a compass. This also helps us with the layout generation and, in general, is another safety net to understand the orientation and positioning. And we also track the GPS location, which basically helps to understand where the property is, and to smooth some of the pipelines as well. So as you see, there are a lot of sensors, and we are working with sensor fusion to make the tracking more accurate. And I think next we can see how it looks in reality. This is a little bit sped up, but this is how the SLAM looks from the algorithm perspective. When all the data is collected, this is what the AI sees, really, in a live setting. What you see is the horizontal LiDAR lighting up to detect the position of this space, and this is how we map the location. Later on, this is the tracking data, where we know at which point and at which angle the scans were done. And by combining these, the scans, the visuals, and the tracking, we can generate all of the assets that we mentioned before. The asset that's visually easiest to link is the floor plan, which I think we can quickly show later.
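
The barometer step described in this part of the talk can be sketched as follows. The Python below uses the standard international barometric formula to turn air pressure into altitude and then counts floor changes from the difference between two readings. The 3 m floor height, the function names, and the sample pressures are assumptions for illustration; the webinar does not describe how the camera's pipeline actually performs this calculation.

```python
def pressure_to_altitude_m(pressure_hpa, sea_level_hpa=1013.25):
    """Altitude from pressure using the international barometric formula
    (standard atmosphere). Only differences between readings matter here."""
    return 44330.0 * (1.0 - (pressure_hpa / sea_level_hpa) ** (1.0 / 5.255))

def floors_changed(start_hpa, current_hpa, floor_height_m=3.0):
    """Estimate how many floors up (+) or down (-) the camera has moved.

    floor_height_m is an assumed typical storey height, not a known
    Giraffe360 parameter.
    """
    climb_m = pressure_to_altitude_m(current_hpa) - pressure_to_altitude_m(start_hpa)
    return round(climb_m / floor_height_m)

ground = 1013.25                                  # reading at the first scan
same_floor = floors_changed(ground, ground)
one_up = floors_changed(ground, ground - 0.36)    # pressure drops as you climb
two_up = floors_changed(ground, ground - 0.72)
one_down = floors_changed(ground, ground + 0.36)
```

Near sea level, pressure falls by roughly 0.12 hPa per metre, so a single storey shows up as a change of only about a third of a hectopascal. That small signal is one reason a barometer reading is more useful combined with the other tracking data than on its own.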
So, as I said before, on the left is SLAM, this is roughly what it looks like, but it's still really rough, right? There's a lot of artifacts that we need to deal with. For example, SLAM doesn't understand if there's a mirror or a glass reflection. From the data perspective, hey, there's just another room. Congrats, your apartment is two times bigger than you thought. But it doesn't work like that. I mean, the floor plan is not going to look accurate. Well, it's not going to be accurate if we just allow it to go through. So we have a lot of compensating mechanisms, like we analyze panoramas and AI understands from the kind of machine learning, that hey, there's a mirror. So we compensate for that, we filter the data, we clean up, and because we know already the arrangement of the scans and everything, we can do this kind of rough room size estimations, rough positioning, which is kind of base data, which then is transformed into floor plan, into virtual tours, et cetera. But the starting point still is good tracking data, together with high accuracy scans and visuals, which are then kind of run through these algorithms automatically, in an ideal scenario without any kind of manual assistance. In the end state, the final asset is being quality checked, but that should be it in the ideal kind of scenario. Yeah, ... Mikus, Samy, do you guys have any questions so far on this? --- Yeah, so one thing that people always ask, and this kind of looking at the Giraffe GO Camera kind of, is how do we go from the scan to the floor plan, right? Like how does the cam, this is what people always ask me, right? How does the camera know where it is? Like they kind of want to understand how it happens. And my answer is obviously a lot shorter than this, but it is always that there's a multitude of different ways in which we detect data. And so, I guess the thing I wanted to ask you is, are these things stacked on top of each other in terms of redundancy? 
Is there space for one to fail, and then does kind of the rest of the picture still get put together if the barometer fails or that type of thing? --- There's definitely a lot of redundancy when it comes to live tracking. That's why we have these multiple layers of sensors, and I'll also show some scenarios of what happens if they don't work. But there are some fallback scenarios that we can work with, and in most, if not all cases, we have a way to fix things. Even if there was an error because of the technology or because of user error, we have some ways to fix it, but the issue is that it will take much, much longer than needed. Much, much longer sounds like a lot, I mean usually it's like what, like a day, but by our standards a day is a lot, right? And the speed of delivery is what really matters, and what pushes us to look for a way and a flow where, well, we don't have the option, we don't really have a day. When it comes to this floor plan generation, we actually use a lot of floor plans. If you talk about floor plans specifically, it's not just tracking and scans, which it might feel like. Actually a lot of knowledge for the floor plan generation comes from panoramas, where our visuals are used by our AI algorithms to recognize corners, to recognize things like a kitchen island or a bathroom, or specific items that we also later visualize in the floor plans as well. So what I'm saying is, technically we could generate floor plans just from point clouds alone, and we could match them together and we could also generate it that way without visuals, without tracking, but it would not be as efficient. So, the efficiency we get is by having this kind of sensor and data fusion together: in the floor plan case, panoramas and 3D. Yeah, I'm not sure if my network is working, but yeah, that covers- --- You're good but- Amazing, like it's to some extent too good to be true. 
I think it's maybe, you want to talk about the problems, like when the- --- Yeah. --- When the capture doesn't happen, when there is, yeah, user error, or show a bit of the dark side of this beautiful reality. --- I really like the expression, dark side. I think that's a good way to say it, because previously this- --- It's literally dark, yeah? --- Because sometimes it gets really, you know, there's no data, which is bad. And also just a lot of data which is poor quality is as bad as no data sometimes. And in the past we have tried to go this route of, we are just going to fix it, in our kind of way, and we're good at fixing stuff, but we see that it's not really scalable. And if there is an issue, a user should know this right at the capture point, because it will result in slower delivery from our side, it might result in inaccuracies, and, for example, if there's a room missing because the user forgot to scan the room, I'm pretty sure the photographer or agent wants to know that right at the capture point, because then you can actually still fix it and you can learn how to do the capture properly. And this is why we also want to talk about this tracking, because it's so connected with how you work with the technology, and what you do on the capture side. And like you said, it is too good to be true because there is a but, right? Even though the technology is so powerful, and so capable, it still needs to be handled properly, in order for the technology and all these pipelines to work. So, this is where we kind of try to introduce, or talk about, the capture cadence as well, which is required during the capture. Here's the same property really, just to illustrate what the data would look like if the tracking fails. And just to visually show a kind of, maybe overly, unnecessarily dramatic view of how unreadable this data would be if the tracking fails. 
And I think it's pretty clear that even our algorithms would struggle with this kind of data to be run through to generate floor plans. This is why, to just repeat myself a little bit, tracking data kind of matters a lot. And this can happen for various reasons, yeah? Go on. --- No, I just wanted to kind of repeat the headline for this section of the webinar, that SLAM is the silent unlocker for the AI. It's the first step, and if the SLAM breaks, then the AI workflows really can't work afterwards. And I think now the headline would make sense for the audience. --- Yeah. And so regarding this, what can go wrong during the capture, or let's say, what can affect the tracking data quality? And there's a lot of things that definitely help for tracking to be better. This is why, when it comes to our camera, we try to kind of explain, educate, and onboard users on the right use of our camera. And just to mention a couple of these aspects that might be new for some of our existing clients, but I think are just as interesting to hear for those who are considering becoming Giraffe PRO Camera users eventually. And I think it's just an interesting kind of aspect of the technology, how it works, and what is worth considering. Just to mention a couple: the technology works better, because, as we saw, it's this horizontal kind of LiDAR, if the property is kind of fixed in time; we call it kind of frozen. By that we mean you open all the doors, you try not to move objects around, because if the LiDAR detects it and remembers there was like a, I don't know, a coat hanger and a couple seconds later the coat hanger is gone, it might get confused by the change in the kind of physical space. Another good tip is to make frequent scans, because that 3D point cloud data is another form of tracking that also helps with kind of positioning, and obviously the camera handling as well. 
Like in the next slide, we talk about keeping the camera vertical and this is actually the key reason why we prefer the camera to be vertical, not like any other direction because of this horizontal LiDAR scanner. And needless to say, if you go steadily and smoothly, the data is just simply better. It just all comes down to, if the data, how good the data is because at the end of the line, it speeds up our kind of, post-processing and pipelines, et cetera. --- But then Janis, our main advice to our users is glide through the property. --- Yes. --- Like gliding, is the recommended attribute of movement instead of sporadic running and yeah, something like that. --- Exactly. And I understand like this might sound counterintuitive, it doesn't make sense, it might not, it might even be hard to remember during the capture, right? Because for some, speed is everything. You feel like, oh I can do a speed record and capture this property in like 10 minutes and good job. I mean you did it, but if then the assets from our side or from the post-processing comes an additional, extra one or two days, is it really worth it? So while we kind of double down on that, you get the best result if you are a little bit more calm during the capture, maybe you take an extra second or two during each scan, but then the assets and the project comes back to you much, much faster. And hence you can deliver it to your client or yourself much faster. And that actually is a much higher benefit than that couple of minutes that you could theoretically speed up during the capture if you were planning to rush it super fast. So, yeah, but it's not all that bad. Like it might sound like, hey, there's a bunch of rules that you now need to learn, criterias, do this, don't do that, technology, all the sensors, theoretically you don't actually need to know all of that. It's good to keep it in mind, especially some of the key aspects of how to handle the technology. 
And obviously it comes with some kind of suggestions and guides how you should use it. But another aspect is where we are helping by just improving technology on its own. And in this case, for example, this is why we're working on this ScanView, which, during the capture allows the user, the person in charge really, to see what's happening. How, how are the scans gathered, is the alignment good or bad? This is where the connection between what we show here on the screen, this mini map of the scans suddenly makes sense because oh yeah, this is, the alignment is okay, it means that I'm pretty certain that the project will go smoothly and if I see if all the rooms are being scanned and there's no major black holes on my map, I'm pretty confident that all the rooms are captured as well and I won't need to revisit the property, which would be really a painful thing to do really. And this is kind of the key element, how we see we can assist the person in charge during the capture, to make this flow much smoother as well. --- For the audience reference actually can spill the beans, but Janis himself has been designing the ScanView for, I dunno, you spent like a, it feels like a good year designing the interface and trying to figure out how to give the right feedback, how to organize the user engagement. And, so the user experience is as smooth as possible, smooth as possible. But, it's probably been a year you've been working on ScanView, like design itself no? --- I think we started it around, I think it'll be soon yeah, full year, definitely around September [2024], October we started it, and initial kind of realization was for us, yeah, how we can move away from our kind of black box approach, show more during the capture, open up kind of, the knowledge of the technology, and this feedback during the capture is what we started with. And, for sure one of them, we believe the easiest and most effective ways to work with visuals, right? 
To visually communicate, kind of give a preview of, like we do with the images, right? It's also just a preview. The final kind of quality and result comes after the post-processing. The same here, we show a preview of scans, preview of tracking results, which kind of is a confirmation. And this is kind of just the first step. We obviously see that there are, even this one, many ways you can improve this. This is a starting point, but we believe it is a solid foundation for the rest of the improvements that are going to come later as well. --- Yeah, nice. I think this was a really beautiful deep dive into SLAM technology. I hope that we will not receive feedback that our audience doesn't want as detailed. I hope this is actually interesting and helps to understand, yeah, how we operate, and how the technology is built and what is important. But, I think we can then maybe switch gears, and Samy-focus, run through what have been the product releases in the last 30 days, what we have released. --- Hundred percent. Yeah, so for people who've joined webinars in the past, they'll know that part of our commitment to our clients and to the photography program, is to be releasing new features to market every 30 days. And it's not just one, and it's not just a font change on the dashboard, it's something that actually makes a difference to you and your clients, and the assets that you are able to provide. So over the last 30 days, we've released Virtual Tour Sky Replacement. So what that means is, blue skies on images have been with us for some time, now they're available on those virtual tours. So when you move from the inside to the outside of the property, you're getting skies that suit the property that move with you, as you move around the virtual tour. Secondly is object removal. Again, inside the virtual tours, the tour was a massive hit using generative AI to fill in issues inside the property. 
So, as an example, some unwanted clutter, a dog's left a surprise in the garden. You can clear that up in real time for your client without having to pull the assets out into a different piece of software. That's now available inside the virtual tours as well. We've released our 3rd gen image AI model. So this is rapid AI, it's a massive improvement to the color cast, contrast, the general editing. So it's bringing those virtual tour panoramas, which are the components that make up the visuals, in line with those amazing images that we're providing to every customer. And finally, there are some big improvements to Listing Spotlight. So much more variety in terms of the social media outputs and the ability for agents to add their touch to the end of the Listing Spotlight in the form of a pack shot. So information, contact information, all that type of stuff. So some big features that have been requested heavily by our customers, and yeah, really happy to see those, and we will continue to do these 30 day releases in each of our webinars and on socials, et cetera. --- Samy, quick question. Is there- Is there any feature that's like a bigger hit from our clients? What do you hear? Is there any of the four that stands out, if you, what people have been mentioning most? --- Yeah, it's a really good question. I think for me, the Virtual Tour Object Removal has been major, right? Like it's one that agents, and photographers, and media providers can actually get their hands on and use. So like the virtual tour sky replacement is obviously really important, because it's bringing everything, kind of to the same caliber in terms of how the property looks. But for photographers, and yeah, real estate media providers, being able to kind of edit those images and do things on behalf of their clients is really powerful. So if the agent says to the photographer, "Hey, I've just realized on the day there's this problem in the house, there's a leak we missed." 
Now a photographer can go and say, "Don't worry about it." Solve that out in a couple of seconds, inside the Virtual Tour as well. So I think it's been a really kind of powerful add-on tool that's a big value add to the customers, at the same time, as a kind of a huge time saver, something that we would've had to do externally. --- When I do a scan, I will always leave a camera bag in one of the shots. Like that's a mistake I can't just unlearn, there's always a camera bag sitting somewhere. I've been waiting for the object remover in the virtual tours for some time to correct my imperfect scanning process, but yeah. --- I like to put my head around the corner and check everything's okay, and then my shiny head ends up in the corner somewhere so I can get rid of that nice and quickly now as well. Brilliant. --- You should always capture with sound on the- Fantastic. So, just yeah, we mentioned earlier, pre-release for the Giraffe PRO Camera is live, and for our existing customers, as always, if you want to get involved, move on to the PRO technology and all of those amazing kind of content outputs that we've seen in previous webinars, and you can order your Giraffe PRO Camera through our customer success team. Just get in touch with us via the normal channels or via the chat bot inside your Content Studio. And for those of us who are here today, who would love to get involved, join the photography program, you can try Giraffe360's program for $123 per month and that's inclusive of five free listings, which you can use over a 60-day period. So there is still space to be a part of that early bird and pre-release section for September 2025 shipments as well. Perfect. So, I guess now we are onto the Q&A, and there have been some questions coming in. Again, if you do want to fire a question over, you can hit the QR code on screen here, fire them over via Slido, we'll get to all of the questions that have been shared with us today. 
So the first question I believe is, "Does Giraffe360 integrate directly with MLS listings and CRMs?" --- [Mikus] Samy, I think you're the best to answer this one. --- Yeah, a hundred percent happy to do so. So, one of the core components here at Giraffe360 is that we've wanted to be the most connected. We've said this for a while, right? We want to be the most connected technology out there in the world, and we are always open to working with CRMs, to working with MLSs. I know that the MLS integration is something that our content team is working on quite heavily at the moment. So the ability to pull data from the MLS, for Realtors, so they can really populate things, like listing spotlight with what's going on in the backend. So yes, integration, we're very open with, and we do work with tons of the major listing platforms via virtual tours, images, floor plans, everything to be deliverables on those platforms as well. --- And just to add, today our content is pre-produced to, to meet MLS's requirements. For example, MLS says, doesn't want any logos on the shot. So, in the Content Studio, you can pick from branded content or MLS ready content. So, we do not necessarily have a direct integration with all the MLSs, but we have built our solution so it fits the rules for the MLSs. And as Samy said, we're now working to build the integration so we can be integrated into literally every single MLS in America. --- Amazing. Yeah, that's a really good point. And that's across everything from the floor plan, all the way through to the virtual tours and immersive, you can create an MLS-ready copy of the content for download as well. Next question. --- Actually a unique thing, sorry, to add, a unique thing is the virtual tours because, I don't know any other company that actually adopts these MLS rules, how we have, also on the virtual tour level, not only on photography and floor plan. --- Brilliant. Good stuff, next question. How do you, I love this question actually. 
How do you make sure that AI generated content doesn't mislead buyers? I can kind of start, right? Because it's something that people always ask. It's like, okay, I want to present the property, how the property looks. And so, what we're doing is, we're not going in there and saying, all right, let's repaint these walls, let's kill any patches in the grass. Like what we're doing is, we're enhancing the visuals of the property, so the viewer gets the full experience. So it's not a case of, hey, we're going to go in there and make it look unreal. It's a case of bringing out the potential in that property, in the content pieces. So it's enhancing, not editing, right? And it's the responsibility of the content creator to accurately represent the space; we're capturing high quality and we're providing those high quality outputs. I hope that kind of answers the question, because conceptually it's a very interesting question. --- Yeah. I can only add that our AI models are designed based on today's marketing requirements. And because, when we think about real estate photography today, photography, virtual tours, everything's enhanced. We kind of don't even like the dark images. Like there has been a very heavy industry push. It's a cultural push, that the marketing image is the accepted image. And I think Samy, you nicely drew the line. We can enhance the image according to marketing standards, because people want to have the best presentation possible of the place, but you can't cross the line and suddenly, without notifying a user, add things that don't exist there, like completely changing the environment. So that's the line which, when you're crossing it, you're starting to misrepresent the space. But the enhancement, that's been the industry standard. And when we started Giraffe360, we actually researched, like some 30 countries' portals, and we looked at what's the set standard for the image quality. 
And what's a fun fact is, it's not the same. There's, depending on the regions, different standards of how people want to perceive pictures, and so training in the AI we are using then these standards, how the markets have defined them. --- Yeah. And even like, we're going, kind of fully in on that as well, because I know from speaking with some of the product owners that they're working on styles which are as true to life as possible. So for those customers who really just want, give me the basic, kind of like depiction of the space, don't write in, and don't amalgamate, like we are even working on kind of from that level. So yeah, it's something that we are- --- Exactly, there will be users who want to rent online, who want to completely do online transactions, and that kind of a client will optimize for maybe, not as enhanced image, but it needs to be more true to life. So these editing styles are the filters we could call them. They're a real thing, but ultimately the buyer makes that decision. Our role in this process as a technology supplier is, we want to give that optionality, our media partner, photographer, applies the optionality based on the client requirements. And so, but we want to build the optionality so the market can decide. --- A hundred percent. So the next question. How do you make sure that AI doesn't get room sizes or property dimensions wrong? --- Janis. --- I Guess- ... First you do good AI. But no, actually, when it comes to property size, we're not relying on AI really. This is where we really rely on data. So for dimensional accuracy, we rely on LiDAR scans. The AI is then used in automations as a visualizing tool to draw these floor plans. I guess that's the short answer. When it comes to property accuracy, it's a big topic that we will try to talk more about in upcoming webinars and we're not- Because it is an interesting topic as well, a very technical one. But in short, I can say AI is not used, at least when it comes to dimensions. 
--- On the accuracy, I would only want to add, I think it's a very important nugget that Janis you threw in there, what is accuracy? And actually it's different depending on different standards. America's core standard for residential real estate is the ANSI standard, which defines the rules of how these measurements need to be applied. But if we use the Raumplan standard or, where we operate in Germany, the German banking standard, then the same space gets represented differently. So, we really need to correlate the conversation with accurate data input and representation according to these different standards. And it's a bit of a fascinating topic, because when we get these questions from a consumer level, they usually ask as if there's this core truth, something like an absolutely accurate floor plan, but actually it's more nuanced, it's a legal standard. But I guess where we can pride the technology today is the amount of sensors, and the information that gets collected, and we're really working hard on calibrating them, on the sensory. So, we can really push for the highest tier of accuracy. --- Brilliant. And I guess the next question is definitely for you as well, Janis. So, is SLAM tracking affected by mirrors in small spaces? --- Oh, yeah, that's a good question. Well, let's say it like this. It definitely is affected, in a sense that it causes challenges, but we have tools and instruments to compensate for that. That's where we use panoramas, and also visual support to detect these and then compensate accordingly. Small spaces can be a bit tricky. There's definitely a minimum, which is simply because the tracking works better if you have like, I don't know, 50 centimeters, maybe 30 centimeters offset from the camera. If it goes closer than that, we simply cut out point cloud data at the moment in a specific radius around the camera. We do it for two reasons. 
One is, when we deliver this point cloud data, we actually extract points that are the tripod itself, because the camera in some angles sees the tripod, and we don't want the camera or tripod to be present in this data. So, definitely in some cases, there is some cutoff radius. And small rooms are also tricky, because you often don't do too many scans there. Obviously we really like the data in our pipeline. If you do like one to three, well optimally more than one, we really prefer two to three scans per room, right? But if it's a really small space, you often can only do one. And then the importance of data quality is that much higher, and there is that much more pressure on that single scan, right? But yeah, mirrors and windows, even though it's tricky, so far we have tools to work with that. --- Do you know Christopher Nolan's movie "Inception"? --- Yeah. --- With Leonardo DiCaprio. I feel where- --- [Janis] The corridor feels created, you mean now or another one? --- Where the world gets distorted, and so it's like, I feel that sometimes with the mirrors, the AI feels that we are entering its Inception. So it's more, I don't know if that- --- Yeah, even, I mean technically and honestly speaking, this camera and technology is really made for residential real estate. And this selection of sensors and principles that we have tailored and selected really works in this environment. And, like for example, if it's an amusement park where you have these mirror rooms, right? I'm pretty sure that will be a tough nugget for us. If it's just mirrors and mirrors everywhere. That might be tricky. Though, I'm not sure. I'm curious what would happen, but there are some limits for sure on how many mirrors we can handle. --- Next, if agents are expecting more than just photos, how do you get floor plans and virtual tours to them? 
And I think kind of the way I've understood this question is, if the agent expects more, how do you also get to the point where you're able to provide more as a media partner? --- I kind of, I guess that's the question is who's the driver for change? And there is, we represent the technology side. We are, that creates a possibility. Then the media partner is the link that then works with the real estate agent. And real estate agents get forced, probably influenced by, I would say, two main stakeholders in their business. It's the buyers, what do they require? The second one is the listing portals, and then probably the third one is the photographer, influencing what's possible. But, ultimately to some extent, the real estate agent needs to make a shift into their requirements for these things to be adopted or it's the listing portals. It's how I would think about a real life adoption of innovation. --- Yeah, and as you say, Mikus, we are providing the technology that would allow a media partner to, from that single visit, provide all of the assets that an agent could request, right? --- Yeah. And buyers have been screaming from top of their lungs, the floor plan has been the second most requested item by home buyers for a couple of years. The demand for the 3D tours is almost 10 times higher than the adoption rate. So we see that the buyers are requesting better online experience for properties, and with technology we can now give the options to produce it very simply. But yeah, the real estate agent in this case is the buyer, or the brokerages are the buyers, and their influence, and their requirement change needs to happen, and hopefully our photographers can do that. --- Brilliant. Next question, is the LiDAR able to capture outdoor photos, et cetera? And I guess, I can clarify for you Janis very briefly. The LiDAR is designed purely to capture the spatial data, right? So this is the measurements. 
Giraffe360 cameras can be used to create all of the other content types outside, and we recommend our customers do. I remember I saw last year, a photographer had captured a castle in France, and it was like the tennis courts, and the pond, and like walking through the woods. So, absolutely you can use the system outside, and we recommend that our clients do, right? You want to show the whole property, but there is something in there as well, Janis, potentially for you around, kind of LiDAR usage outside, and what that looks like. --- Yeah. So, when it comes to the floor plans, right, that's primarily indoors and this is where the LiDAR 3D scan really shines and is really used. When it comes to the outdoor setting, at the moment we are, we're showing like panoramas, the scans that you do outdoors, we still show them in these virtual tours, for example, right? But the scan itself, like the point cloud data, we also deliver it to the user, but it's not converted to like a floor plan in that sense. And like you pointed out, these two things are separate. Like one thing is LiDAR, which is for the point clouds and a 3D spatial data collection. And the other thing is photos. If you talk about still photos, our camera has still image capture mode, which is used both for indoor and outdoors. You can adjust the angle, you can adjust the field of view. There are a couple of settings you can do and it works for any kind of environment. And the same goes well for the panorama. It also works for both settings. But I guess where outdoors is tricky in the sense that at the moment we're not, we are not generating this kind of property, kind of, top-down blueprint, or how would you call this, that sometimes is generated for the properties, this kind of field, anything that outside of the, kind of indoors. So yeah. --- Like some sort of map. 
But I think an important limitation maybe for me to mention is that, that means that we've been showcasing the Gaussian splat video feature, which will be possible with the Giraffe PRO Camera. It'll be limited to the indoor spaces, because for that production the LiDAR is important. And so, we'll not be able to produce like these outside, flying beautiful views, they will be limited for the indoor spaces, definitely for the earlier releases, that will be the limitation that people need to be aware of or should be good at. --- Nice. One that we've heard before. So a remote control would be desirable, utilized via an iPhone app. Janis, I'm definitely going to hand this one to you, because I know you've explored this. --- Yes. I'm looking forward to this one as well. I have to say that at the moment we don't have that functionality yet, but we definitely have it in our kind of to-do list. Hard to say when to expect this around, probably not [2025], but we'll see. We're very busy at the moment with launching the Giraffe PRO Camera and all the pipeline et cetera. Once that's being cleared, we will revisit our pipeline and then we'll let everyone know what's next. And this is definitely among the features we have heard from our customers. There's some, I mean for those who maybe are not so familiar with the capture flow, what is really required for the user is to leave the room, while the scan is happening, right? And we have the sound notification that comes from the camera once the scan is done so you can come back at, and move the camera to the next spot, right? And sometimes you miss this sound, right, or let's say, if you want to check if everything is okay, while this scan's being done. So it's not a critical function, but we see that, yeah, it could in some corner cases be a nice thing to add. And we are definitely looking into how we could address this in the future as well. --- We'd love to hear feedback and product requests. It's just, yeah. 
I know the teams love it. Next up, Mikus, one you are going to like, "Will you be supplying marketing materials for photographers such as video, print and social media, email templates, that the photographer can brand for themselves." --- Yes, but I don't have the ETA. I guess this is a good shout for the other Janis, who leads the photographer program. We want to get the collateral ready, and help our partners with their marketing. Yes, no ETA, but yes, we definitely want to do that. --- But I think, Mikus, the question is also asking, will we supply materials that the photographer can brand for themselves? And so there is, yes, there is the element of, yeah, we want to put together a marketing pack that photographers can use to, like, market the services that they can provide as a Giraffe360 photographer. But also maybe it's good to look at listing spotlight as a part of this as well, because every time you capture, you'll be able to generate social media, print, and email-ready content for your agents. So, that in itself is a form of marketing that you can utilize from day one of using your Giraffe360 cameras. --- Yep. I think, yeah, you covered it nicely. --- Yeah. Next up, "I currently use Matterport and CubiCasa for tour, floor plan, photos and information points. It requires stationary scans. Is Giraffe in motion drawing scans? Am I able to capture 2D photos from the product?" --- I guess I can answer. So we're also working with stationary scans, and also with stationary image capture. The reason being, you just get so much more light and exposure to work with, to get really good visual assets. And then when it comes to the scan accuracy, for now, even though we also collect some data in transit, when you go from A to B, for the final data we're actually only using the stationary scans, because they're just so much more accurate.
But when it comes to the 2D photos, the best quality is if you use a camera on a tripod, like ours, and shoot the image at the property, right? Though there is also an option to extract an image from the panoramas through, like, a screen view, let's call it that, in our dashboard tool as well. It's already available there and has been for a while. So you could technically get an image out from the panorama, though in my opinion, the image quality is that much better if you do a 2D image shoot right away with a camera, our camera in that sense. --- Yeah. Perfect. Final question for today. So, who owns the final product? Is it downloadable for historical purposes? And I guess this is the content that's generated during the capture process. --- [Mikus] Samy, do you want to answer it? --- Yeah, I mean, yeah, whoever has the Giraffe360 camera subscription is the person that owns the final asset. So the virtual tours, floor plans, and any downloadable content is yours. You own the rights to that content. All of the panoramas that make up the virtual tours can be downloaded, et cetera, et cetera. The only kind of caveat is that the virtual tours themselves are hosted on our infrastructure. So yeah, the virtual tours are accessible via a link, and yeah, you need a subscription for those tours to be live, but you own, essentially, the intellectual property rights. And we will never use our clients' data, even for marketing purposes, without asking the client in advance. We only use the captured data, anonymized, to feed back into and train the systems internally. So yes, I guess that is us for today. --- Thanks to everyone who joined in, thanks. --- Yeah, sorry, somebody asked if the previous webinars are available, and yes, they are available on our YouTube profile and there's tons of information, and kind of like amazing concepts that we shared around the drone footage, the release of the Giraffe PRO Camera and so, yeah, lots of really exciting stuff in those as well.
--- Yeah, if the three of us haven't bored you for the last hour or so, then you can go and get three more hours of us, kind of going down similar rabbit holes. Yeah, yeah. --- Brilliant. Well, thank you very much today. Thank you Mikus and Janis for joining again, and to everybody who's listened, and yeah, can't wait for the next one. --- Thank you, ciao. |

